CloudEngine Series Data Center Switches

Huawei's CloudEngine (CE) series switches are high-performance cloud switches designed for next-generation data centers and high-end campus networks. The series includes the CE12800 core switch, a flagship product offering the world's highest performance, and the CE6800/5800 high-performance fixed (box-type) switches for 10 GE/GE access. The CE series uses Huawei's next-generation VRP8 software platform and supports a wide range of data center and campus network service features.

CE12800 Series

CE12800 switches are high-performance core switches designed for data center networks and high-end campus networks.

Their advanced hardware architecture offers the highest performance of any currently available core switch. The CE12800 provides as much as 64 Tbit/s switching capacity and high-density line-speed ports. Each switch has up to 192 x 100 GE, 384 x 40 GE, or 1,536 x 10 GE ports.

The CE12800 switches use an industry-leading Clos architecture and a front-to-back airflow design to provide industrial-grade reliability. The switches also provide comprehensive virtualization capabilities and data center service features. Moreover, the CE12800 switches’ energy-saving technologies greatly reduce power consumption.

The CE12800 series is available in four models: CE12816, CE12812, CE12808, and CE12804. All models use interchangeable components to reduce spare-parts costs. This design ensures device scalability and protects customers' investments.

CE7800 Series

CE7800 switches can be used as core or aggregation switches in data centers and on campus networks.

The CE7800 has an advanced hardware design and provides high-density 40 GE QSFP+ ports (each 40 GE QSFP+ port can be used as four 10 GE SFP+ ports), L2/L3 line-speed forwarding, a large number of data center features, and high-performance stacking. The airflow direction is selectable: front-to-back or back-to-front.

The CE7800 has one model: CE7850-32Q-EI.

| Model | Description |
|---|---|
| CE7850-32Q-EI | 32 x 40 GE QSFP+ ports |

CE6800 Series

The CE6800 switches provide high-density 10 GE access in data centers and can also be used as core or aggregation switches on campus networks.

The CE6800 has an advanced hardware design that supports the industry's highest density of 10 GE access ports. The CE6800 provides 40 GE QSFP+ uplink ports (each 40 GE QSFP+ port can be used as four 10 GE SFP+ ports), L2/L3 line-speed forwarding, many data center features, and high-performance stacking. The airflow direction is also selectable: front-to-back or back-to-front.

The CE6800 comes in three models: CE6850-48T4Q-EI, CE6850-48S4Q-EI, and CE6810-48S4Q-EI.

| Model | Description |
|---|---|
| CE6850-48T4Q-EI | 48 x 10 GE BASE-T ports, 4 x 40 GE QSFP+ ports |
| CE6850-48S4Q-EI | 48 x 10 GE SFP+ ports, 4 x 40 GE QSFP+ ports |
| CE6810-48S4Q-EI | 48 x 10 GE SFP+ ports, 4 x 40 GE QSFP+ ports |

CE5800 Series

The CE5800 switches provide high-density GE access in data centers and can also be used as aggregation or access switches on campus networks.

The CE5800 series switches were the first in the industry to provide 40 GE uplink ports, and their advanced hardware design supports the industry's highest density of GE access ports. The CE5800 provides L2/L3 line-speed forwarding, a large number of data center features, and high-performance stacking. The airflow direction is selectable: front-to-back or back-to-front.

The CE5800 comes in four models: CE5850-48T4S2Q-HI, CE5850-48T4S2Q-EI, CE5810-48T4S-EI, and CE5810-24T4S-EI.

| Model | Description |
|---|---|
| CE5850-48T4S2Q-HI | 48 x GE BASE-T ports, 4 x 10 GE SFP+ ports, 2 x 40 GE QSFP+ ports |
| CE5850-48T4S2Q-EI | 48 x GE BASE-T ports, 4 x 10 GE SFP+ ports, 2 x 40 GE QSFP+ ports |
| CE5810-48T4S-EI | 48 x GE BASE-T ports, 4 x 10 GE SFP+ ports |
| CE5810-24T4S-EI | 24 x GE BASE-T ports, 4 x 10 GE SFP+ ports |

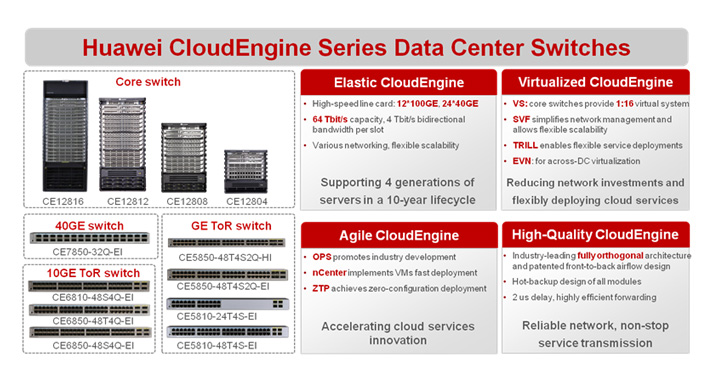

The CE series data center switches help enterprises and carriers build a 960 Tbit/s non-blocking switching platform that provides access to tens of thousands of 10 GE/GE servers. The platform's elastic, virtualized, and high-quality fabric helps build a stable yet agile service network. The switches provide industry-leading performance, full virtualization, many data center features, and an advanced architecture.

Elastic CloudEngine

Elastic network for the next 10 years of business growth: CE switches can build a 960 Tbit/s non-blocking switching network that supports four generations of data center servers, from GE through 10 GE and 40 GE to 100 GE. With this non-blocking switching platform in place, customers do not need to upgrade their networks when servers move to 40 GE or even 100 GE access.

World's highest-performance core switch: The CE12800 provides 4 Tbit/s bidirectional bandwidth per slot (scalable to 10 Tbit/s) and 64 Tbit/s switching capacity (scalable to more than 160 Tbit/s). The CE12800 supports a variety of high-speed line cards: 12 x 100 GE, 24 x 40 GE, and 96 x 10 GE. Each switch can provide as many as 192 x 100 GE, 384 x 40 GE, or 1,536 x 10 GE line-speed ports. The high capacity and port density make the CE12800 an industry leader.
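The capacity and port figures above are mutually consistent if the largest chassis has 16 line-card slots. The sketch below checks that arithmetic under that assumption (the slot count is inferred from the model name CE12816 and from 192 / 12 = 16; it is not stated in the text). It also confirms that a fully loaded card of each type needs less than the quoted 4 Tbit/s of bidirectional slot bandwidth.

```python
# Consistency check of the CE12800 figures quoted above.
# Assumption: the largest chassis (CE12816) has 16 line-card slots.
SLOTS = 16
PER_SLOT_BIDIR_TBPS = 4             # quoted bidirectional bandwidth per slot
CARDS = {100: 12, 40: 24, 10: 96}   # port speed in GE -> ports per line card


def chassis_ports(slots: int, ports_per_card: int) -> int:
    """Total line-speed ports when every slot holds the same card type."""
    return slots * ports_per_card


def card_bidir_gbps(speed_ge: int, ports: int) -> int:
    """Bidirectional bandwidth a fully loaded card needs, in Gbit/s."""
    return 2 * speed_ge * ports


if __name__ == "__main__":
    print("switching capacity:", SLOTS * PER_SLOT_BIDIR_TBPS, "Tbit/s")
    for speed, ports in CARDS.items():
        print(f"{speed} GE: {chassis_ports(SLOTS, ports)} ports per chassis, "
              f"{card_bidir_gbps(speed, ports)} Gbit/s per card")
```

Every card type fits within a 4,000 Gbit/s slot with headroom, which is consistent with the per-slot figure leaving room for faster cards.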

40 GE uplink ports on ToR switches: The CE6800 and CE5800 provide very high densities of 10 GE and GE access ports, respectively. The CE6800 models and the CE5850 models provide 40 GE uplink ports with the highest forwarding performance in the industry.

Largest cloud-computing network platform in the industry: the CE12800 series can implement a 960 Tbit/s non-blocking switching network that uses a distributed buffering mechanism on inbound interfaces to support computing in super-large 10 GE server clusters.

Highest server access capability in the industry: In a flat two-tier architecture consisting of core and ToR access switches, the CE12800 leads the world in server access. Combined with CE7800/6800/5800 switches over 40 GE links, the CE12800 can build a network that provides access for up to 18,000 10 GE servers or 70,000 GE servers.
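The server counts above depend on how many cores are deployed and how ToR uplinks are spread across them, which the text does not specify. As a hedged illustration, the sketch below sizes a two-tier fabric from assumed parameters: four CE12800 cores with 384 x 40 GE ports each, and ToRs shaped like the CE6850-48S4Q-EI (48 x 10 GE down, 4 x 40 GE up, one uplink per core). This hypothetical layout lands close to the quoted 18,000 10 GE servers; it is one plausible configuration, not Huawei's stated derivation.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class TwoTierFabric:
    """Hypothetical core + ToR fabric; every field is an assumed input."""
    cores: int              # number of core switches
    ports_per_core: int     # 40 GE ports on each core
    uplinks_per_tor: int    # 40 GE uplinks on each ToR, spread across the cores
    servers_per_tor: int    # server-facing ports on each ToR
    server_speed_ge: int    # server port speed in GE
    uplink_speed_ge: int = 40

    def tor_count(self) -> int:
        # Every ToR uplink lands on one core port, so core ports bound the ToR count.
        return self.cores * self.ports_per_core // self.uplinks_per_tor

    def server_count(self) -> int:
        return self.tor_count() * self.servers_per_tor

    def oversubscription(self) -> Fraction:
        # Server-facing bandwidth divided by uplink bandwidth on one ToR.
        down = self.servers_per_tor * self.server_speed_ge
        up = self.uplinks_per_tor * self.uplink_speed_ge
        return Fraction(down, up)


if __name__ == "__main__":
    # Assumed layout: 4 cores x 384 x 40 GE; ToRs with 48 x 10 GE down, 4 x 40 GE up.
    fabric = TwoTierFabric(cores=4, ports_per_core=384, uplinks_per_tor=4,
                           servers_per_tor=48, server_speed_ge=10)
    print(fabric.tor_count(), fabric.server_count(), fabric.oversubscription())
```

With these inputs the sketch reports 384 ToRs, 18,432 servers, and a 3:1 oversubscription ratio. `Fraction` keeps the ratio exact rather than rounding it as a float.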

Virtualized Fabric

Comprehensive network virtualization: The CE series uses Virtual System (VS) and Cluster Switch System (CSS)/Super Virtual Fabric (SVF) technologies to flexibly adjust network boundaries and meet network virtualization requirements. The switches use Transparent Interconnection of Lots of Links (TRILL) and nCenter technologies to support service and server virtualization. The switches use Ethernet Virtual Network (EVN) technology to optimize Layer 2 interconnection between data centers and implement service resource sharing across data centers.

VS for on-demand resource sharing: The CE12800 allows one switch to be virtualized into as many as 16 logical switches. This 1:16 ratio permits one core switch to serve multiple parts of an enterprise (such as production, office, and DMZ) or multiple tenants. VS technology improves device utilization and reduces network construction costs while maintaining high security.
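To make the 1:16 ratio concrete, the following hypothetical model treats VS as a partition of one chassis's physical ports into at most 16 logical switches, with each port belonging to exactly one VS. The class and method names are invented for illustration; real VS configuration is done on the switch itself and covers far more than port ownership.

```python
MAX_VS = 16  # quoted upper limit of logical switches per CE12800


class PhysicalSwitch:
    """Hypothetical chassis whose ports are partitioned among virtual systems."""

    def __init__(self, ports):
        self.free_ports = set(ports)
        self.virtual_systems = {}  # VS name -> set of ports it owns

    def create_vs(self, name, ports):
        if len(self.virtual_systems) >= MAX_VS:
            raise ValueError(f"limit of {MAX_VS} virtual systems reached")
        if name in self.virtual_systems:
            raise ValueError(f"VS {name!r} already exists")
        ports = set(ports)
        taken = ports - self.free_ports
        if taken:
            # A port can serve only one VS, which keeps tenants isolated.
            raise ValueError(f"ports already assigned or unknown: {sorted(taken)}")
        self.free_ports -= ports
        self.virtual_systems[name] = ports
        return ports


if __name__ == "__main__":
    core = PhysicalSwitch(range(64))
    core.create_vs("production", [0, 1])
    core.create_vs("office", [2, 3])
    core.create_vs("dmz", [4])
    print(sorted(core.virtual_systems), len(core.free_ports))
```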

SVF/CSS simplifies network management: CE series switches support SVF/CSS technology, which virtualizes multiple homogeneous or heterogeneous physical switches into one logical switch to simplify network management and improve reliability. CSS, an industry-leading core switch cluster technology, virtualizes core devices horizontally into one logical switch. SVF extends the system vertically across heterogeneous switches, virtualizing multiple leaf switches into remote cards of the spine switch, which simplifies equipment room cabling and device management. Uniquely, Huawei's SVF allows leaf switches to forward traffic locally. When horizontal (east-west) traffic dominates in a data center, local forwarding improves forwarding efficiency and reduces network delay.
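The benefit of local forwarding is easiest to see by counting the switches a frame crosses. In this hypothetical sketch, a leaf acting as a remote card either switches same-leaf traffic itself (local forwarding) or hairpins it through the spine, as a centrally forwarded port extender would. The names `leaf1`, `leaf2`, and `spine` are made up for illustration.

```python
def hop_path(src_leaf: str, dst_leaf: str, local_forwarding: bool) -> list[str]:
    """Return the switches a frame traverses between servers on two leaves."""
    if src_leaf == dst_leaf and local_forwarding:
        return [src_leaf]                      # switched on the leaf itself
    if src_leaf == dst_leaf:
        return [src_leaf, "spine", src_leaf]   # hairpin up to the spine and back
    return [src_leaf, "spine", dst_leaf]       # inter-leaf traffic always crosses the spine


if __name__ == "__main__":
    for local in (True, False):
        print("local forwarding" if local else "central forwarding",
              hop_path("leaf1", "leaf1", local))
```

Only same-leaf traffic changes; traffic between different leaves crosses the spine either way.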

Innovative CSS + VS synergy technology: The CE12800 combines CSS and VS technologies to turn a network into a resource pool so that network resources can be allocated on demand. This on-demand resource allocation is ideal for the cloud-computing service model.

Large-scale routing bridge to support flexible service deployment: All CE series switches support TRILL, a standard IETF protocol. The TRILL protocol helps implement a large Layer 2 network with more than 500 nodes. This network enables flexible service deployment and VM migration that are free from restrictions imposed by physical locations. A TRILL network can connect both 10 GE and GE servers.
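TRILL routing bridges (RBridges) run IS-IS to compute shortest paths to each other and can load-share over equal-cost paths, which lets a large Layer 2 network use all of its links instead of blocking some of them. The sketch below is a simplification: it assumes every link has the same cost, so breadth-first search stands in for the IS-IS shortest-path-first calculation, and it counts equal-cost paths in a small hypothetical leaf-spine topology.

```python
from collections import deque


def shortest_paths(graph: dict[str, list[str]], src: str) -> tuple[dict[str, int], dict[str, int]]:
    """BFS from src over unit-cost links.

    Returns (distance, path_count): hop distance to every reachable node and
    the number of distinct equal-cost shortest paths to it.
    """
    dist = {src: 0}
    count = {src: 1}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nbr in graph[node]:
            if nbr not in dist:
                # First time reached: this is the shortest distance.
                dist[nbr] = dist[node] + 1
                count[nbr] = count[node]
                queue.append(nbr)
            elif dist[nbr] == dist[node] + 1:
                # Another predecessor at the same distance: one more equal-cost path.
                count[nbr] += count[node]
    return dist, count


# Hypothetical fabric: two leaf RBridges, each linked to two spine RBridges.
LEAF_SPINE = {
    "leaf1": ["spine1", "spine2"],
    "leaf2": ["spine1", "spine2"],
    "spine1": ["leaf1", "leaf2"],
    "spine2": ["leaf1", "leaf2"],
}

if __name__ == "__main__":
    dist, count = shortest_paths(LEAF_SPINE, "leaf1")
    print("leaf1 -> leaf2:", dist["leaf2"], "hops over", count["leaf2"], "equal-cost paths")
```

Traffic from `leaf1` to `leaf2` can be spread over both spines, whereas a spanning tree would block one of the two paths.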

nCenter enables automatic migration of VMs: nCenter is an automated management system for virtualized data centers. It automatically delivers network configurations to devices when VMs migrate. Additionally, nCenter provides high-speed RADIUS interfaces that make VM migration 10 to 20 times faster than the industry average.
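nCenter's internals are not described here, so the following is only a hypothetical model of the behavior the paragraph names: when a VM moves, its network policy is withdrawn from the old access port and applied at the new one. The `PortProfile` fields, the switch names, and the port names are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PortProfile:
    """Hypothetical per-VM network policy."""
    vlan: int
    acl: str


@dataclass
class FabricPolicyState:
    # (switch, port) -> {vm name: profile applied for that VM}
    applied: dict = field(default_factory=dict)
    # vm name -> (switch, port) the VM currently sits behind
    location: dict = field(default_factory=dict)

    def on_vm_migrated(self, vm: str, new_loc: tuple, profile: PortProfile) -> None:
        """Withdraw the VM's policy from its old port and apply it at the new one."""
        old_loc = self.location.get(vm)
        if old_loc is not None:
            # Remove only this VM's entry; other VMs on the old port keep theirs.
            self.applied.get(old_loc, {}).pop(vm, None)
        self.applied.setdefault(new_loc, {})[vm] = profile
        self.location[vm] = new_loc


if __name__ == "__main__":
    state = FabricPolicyState()
    web = PortProfile(vlan=100, acl="web-in")
    state.on_vm_migrated("vm1", ("tor1", "port1"), web)
    state.on_vm_migrated("vm1", ("tor2", "port5"), web)
    print(state.applied)
```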

EVN implements resource sharing across data centers: The CE12800 supports EVN technology. EVN implements inter-data-center Layer 2 interconnection across the IP WAN, integrates multiple data centers into a super-large IT resource pool, and enables VMs to migrate across data centers. EVN supports Layer 2 interconnection among a maximum of 32 data centers, 5 times more than the industry average.

Agile CloudEngine

Fully programmable, agile switch: Huawei CE series switches enable programmability in the forwarding and control planes. Huawei's Ethernet Network Processor (ENP) card provides programmability in the forwarding plane, extending network functions. The Open Programmability System (OPS) provides programmability in the control plane and various open APIs to connect to mainstream cloud platforms and third-party controllers, implementing an open and controllable network.

ENP supports service innovation: The CE12800 is based on Huawei's innovative ENP programmable chip. The high-performance, programmable, 480 Gbit/s ENP card can define and extend network functions through software, so provisioning new services does not require replacing hardware. As a result, the time needed to provision a new service is shortened from two years to six months.

OPS provides service customization: CE series switches use the OPS module embedded in the VRP8 software platform to provide programmability in the control plane. The OPS provides open APIs for users or third-party developers. APIs can be integrated with mainstream cloud platforms (including commercial and open cloud platforms) and third-party controllers. The OPS enables services to be flexibly customized and supports automatic management. The OPS seamlessly integrates data center services with the network and enables a service-oriented, software-defined network.

Virtualized gateway achieves fast service deployment: CE series switches can work with mainstream virtualization platforms. As a high-performance hardware gateway for overlay networks (NVO3/NVGRE/VXLAN), a CE series switch can support more than 16M tenants. This function implements fast service deployment without changing the customer network. It also protects customer investments. In this application, the CE series switch can connect to the cloud platform through an open API to provide unified management of software and hardware networks.
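The 16M-tenant figure follows from the size of the overlay segment identifier. VXLAN (RFC 7348) carries a 24-bit VXLAN Network Identifier (VNI), giving 2^24 = 16,777,216 segments, and NVGRE's Virtual Subnet ID is also 24 bits. Below is a minimal sketch of packing and parsing the 8-byte VXLAN header with Python's `struct` module; the function names are our own.

```python
import struct

VNI_BITS = 24
MAX_VNI = 2**VNI_BITS - 1  # 16,777,215, so 16,777,216 distinct segments


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header defined in RFC 7348."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    # Byte 0: flags with the I bit (0x08) set; bytes 1-3: reserved;
    # bytes 4-6: VNI; byte 7: reserved (the low byte of the shifted word).
    return struct.pack("!B3xI", 0x08, vni << 8)


def vxlan_vni(header: bytes) -> int:
    """Extract the VNI from an 8-byte VXLAN header."""
    flags, word = struct.unpack("!B3xI", header)
    if not flags & 0x08:
        raise ValueError("I flag not set: VNI is not valid")
    return word >> 8


if __name__ == "__main__":
    hdr = vxlan_header(5000)
    print(hdr.hex(), vxlan_vni(hdr), MAX_VNI + 1)
```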

ZTP implements zero-configuration deployment: CE series switches support Zero Touch Provisioning (ZTP). ZTP enables a CE switch to automatically obtain and load version files from a USB flash drive or file server, freeing network engineers from on-site configuration or deployment. ZTP reduces labor costs and increases device deployment efficiency. ZTP provides built-in scripts for users through open APIs. Data center users can use the programming language they are familiar with, such as Python, to provide unified configuration of network devices.
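ZTP itself runs on the switch, but the per-device files it downloads have to come from somewhere. As an illustration of the Python-based unified configuration that this paragraph mentions, this sketch renders one configuration file per device from a shared template and a hypothetical inventory keyed by serial number. The inventory format, serial numbers, and file naming are assumptions. The `sysname` and `vlan batch` lines are VRP-style examples and should be checked against the CE configuration guide before use.

```python
from string import Template

# Hypothetical shared template. The VRP-style lines are illustrative only;
# verify each command against the CE configuration guide before use.
BASE_CONFIG = Template(
    "sysname $hostname\n"
    "vlan batch $vlans\n"
)

# Hypothetical inventory keyed by device serial number.
INVENTORY = {
    "ESN-0001": {"hostname": "ToR-A01", "vlans": [10, 20]},
    "ESN-0002": {"hostname": "ToR-A02", "vlans": [10, 30]},
}


def render_config(serial: str) -> str:
    """Return the configuration text for one device, keyed by serial number."""
    device = INVENTORY[serial]
    return BASE_CONFIG.substitute(
        hostname=device["hostname"],
        vlans=" ".join(str(v) for v in device["vlans"]),
    )


if __name__ == "__main__":
    for serial in INVENTORY:
        # A file server could publish each result (e.g. as <serial>.cfg) for ZTP to fetch.
        print(f"--- {serial} ---")
        print(render_config(serial), end="")
```

Keeping one template and varying only the inventory is what makes the configuration "unified": a fix to the template reaches every device on its next provisioning run.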

High-Quality CloudEngine

High-reliability network designed for data centers: The CE12800 guarantees industrial-grade reliability and uses a redundant design for both hardware and software to ensure service continuity in data centers. The CE12800's industry-leading non-blocking switching architecture ensures non-stop services. CE series switches can also implement a lossless, low-latency Ethernet network, which provides unified, lossless transmission for high-value services. In addition, Huawei CE series switches support a strict front-to-back airflow design, which meets data centers' heat dissipation requirements and greatly reduces equipment room power consumption.

Non-stop switching core supports service continuity: The CE12800's components (MPUs, SFUs, CMUs, power supplies, and fans) use a hot-backup design. Three major buses (monitoring, management, and data) work redundantly, ensuring the high reliability of core nodes. By using Huawei's next-generation VRP8 software platform, the CE12800 can support In-Service Software Upgrade (ISSU) technologies, which ensure continuity of services during service switching and in-service upgrades.

Non-blocking switching architecture to ensure non-stop services: The CE12800's non-blocking switching architecture features an orthogonal switch fabric design, Clos architecture, cell switching, Virtual Output Queuing (VoQ), and a super-large buffer. The orthogonal switch fabric greatly improves system bandwidth and evolution capabilities and enables switching capacity to scale to more than 100 Tbit/s. The cell-switching-based Clos architecture supports dynamic routing, load balancing, and expansion of the flexible switch fabric. The VoQ mechanism, together with the super-large buffer on inbound interfaces (as much as 18 GB per line card), provides fine-grained QoS and implements unified service scheduling, in-order forwarding, and non-blocking switching.
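Virtual Output Queuing avoids head-of-line blocking. Each ingress keeps a separate queue per egress port, so a packet waiting for a congested egress does not hold up packets bound elsewhere. This simplified sketch (no cells, credits, or fabric scheduler; all names hypothetical) contrasts a VoQ ingress with a single-FIFO ingress when egress `A` is congested and only `B` can accept traffic.

```python
from collections import deque


class IngressVoQ:
    """One ingress port holding a separate queue per egress port."""

    def __init__(self, egress_ports):
        self.queues = {port: deque() for port in egress_ports}

    def enqueue(self, packet, egress):
        self.queues[egress].append(packet)

    def schedule(self, ready_egress):
        """Send at most one packet to each egress port that can accept traffic."""
        sent = []
        for port in ready_egress:
            q = self.queues.get(port)
            if q:
                sent.append((port, q.popleft()))
        return sent


class IngressFIFO:
    """Single shared FIFO for comparison: a blocked head stalls everything behind it."""

    def __init__(self):
        self.queue = deque()

    def enqueue(self, packet, egress):
        self.queue.append((egress, packet))

    def schedule(self, ready_egress):
        if self.queue and self.queue[0][0] in ready_egress:
            egress, packet = self.queue.popleft()
            return [(egress, packet)]
        return []


if __name__ == "__main__":
    voq, fifo = IngressVoQ(["A", "B"]), IngressFIFO()
    for ingress in (voq, fifo):
        ingress.enqueue("p1", "A")   # egress A is congested
        ingress.enqueue("p2", "B")   # egress B is free
    print("VoQ: ", voq.schedule(["B"]))
    print("FIFO:", fifo.schedule(["B"]))
```

In the FIFO case, `p2` waits behind `p1` even though its own egress is idle; the VoQ ingress delivers it immediately.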

Lossless switching network ensures unified service transmission: The CE series' Data Center Bridging (DCB) ensures low latency and zero packet loss for Fibre Channel (FC) storage and high-speed computing services. The CE12800 core switch has 2 μs latency, one-third the industry average, making it well suited to latency-sensitive, high-value services such as online video and games. The CE series can implement a lossless, low-latency Ethernet network that carries storage, computing, and data services while reducing network construction and maintenance costs.
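DCB achieves zero loss largely through Priority-based Flow Control (PFC, IEEE 802.1Qbb). When the queue for one priority fills, the switch asks its upstream neighbor to pause only that priority, and the other traffic classes keep flowing. The sketch below models that per-priority pause/resume decision with hysteresis. The XOFF/XON thresholds and buffer units are hypothetical, and a real implementation sends PFC frames with pause timers rather than returning strings.

```python
XOFF = 80  # hypothetical buffer depth (cells) at which a priority is paused
XON = 40   # hypothetical depth at which the paused priority may resume


class PfcPort:
    """Tracks per-priority ingress queue depth and pause state on one port."""

    def __init__(self, priorities=range(8)):
        self.depth = {p: 0 for p in priorities}
        self.paused = {p: False for p in priorities}

    def update(self, priority: int, depth: int):
        """Record a queue depth; return 'PAUSE', 'RESUME', or None for that priority."""
        self.depth[priority] = depth
        if not self.paused[priority] and depth >= XOFF:
            self.paused[priority] = True
            return "PAUSE"
        if self.paused[priority] and depth <= XON:
            self.paused[priority] = False
            return "RESUME"
        return None  # between thresholds: keep the current state (hysteresis)


if __name__ == "__main__":
    port = PfcPort()
    for depth in (50, 90, 60, 30):
        print("priority 3 depth", depth, "->", port.update(3, depth))
```

The gap between XOFF and XON prevents the port from toggling pause on and off at every small change in queue depth.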

Strict front-to-back airflow design greatly reduces power consumption: CE series switches use a strict front-to-back airflow design, which meets data centers' strict heat dissipation requirements. In the CE12800 core switch, line cards and the switching network use independent airflow channels, which prevents hot and cold air from mixing, avoids cascade heating, and effectively reduces energy consumption in equipment rooms. The CE7800/6800/5800 ToR switches support a selectable front-to-back or back-to-front airflow direction.

Why Huawei?

Huawei has 20 years' experience in the IP field and offers the largest data center product portfolio in the industry. Huawei's end-to-end data center solutions include network infrastructure, disaster recovery, security, and network management. Huawei data center products and solutions have been widely used in large enterprises, vertical industries, Internet corporations, and carrier networks.

As a world-leading network solutions provider, Huawei has a long-term plan for data center network development and is committed to investing in data centers for the long run. This commitment is backed by Huawei's extensive research capabilities: world-class experts, vast experience in developing data center standards, and broad chip development capabilities.

The CE series switches and Cloud Fabric network solution are products of Huawei's extensive experience in working with IP networks during the last decade. They enable customers to build next-generation data center cloud networks that support sustainable development of cloud services into the foreseeable future.

Expert opinion

- Huawei Cloud Fabric: Creating a Future for Cloud Networks

- The Cloud Era: The Time to Take Action Has Finally Come (Huawei Cloud Fabric Solution)

- Future Directions in Cloud Computing and the Influence on Networks

- Structural Design Considerations for Data Center Networks

- Next-generation Data Center Core Switches

- Architecture Evolution and Development of Core Switches in Data Centers

- The Internet Data Center Network: Challenges and Solutions

Rapidly developing cloud computing applications have brought great changes to servers and storage devices in data centers. As a result, data centers must change their network architecture to adapt to these new cloud computing applications. Huawei developed the Cloud Fabric architecture to meet the challenges in the cloud computing era.

As cloud computing services develop rapidly, data center network architecture must evolve and adapt in turn. Based on its experience, Huawei promotes the Cloud Fabric solution to adapt to the cloud era.

In 2004, Jeffrey Dean and Sanjay Ghemawat presented a paper on MapReduce, a programming model for processing large data sets. In 2006, Amazon launched its Elastic Compute Cloud (EC2) service, and Eric Schmidt of Google first put forward the cloud computing concept. In 2009, some consulting companies projected that adopting cloud computing would be the best IT strategy. Today, it is generally recognized that cloud computing will be a key mode of service provision in the future. Many technologies and service models are emerging to support cloud computing and propel its development. A key issue is how to turn cloud computing from concept into practice on top of the underlying network infrastructure.

Modern technology is an extension of human features. For example, the phone is an extension of human sound, the TV is an extension of human sight, and the data center is an extension of the human brain. A network can be compared to the nervous system, which connects all of these, conveying instructions between them and linking them all together. As important as the nervous system is to human health, a healthy network, with high bandwidth, low latency, and high reliability, is critical for those looking to enter the cloud era.

Since the introduction of cloud computing technology in 2006, it has developed so rapidly that almost all enterprise IT services have migrated, or are in the process of migrating, to cloud-computing platforms.

As the Internet continues to develop, the quantity of data has grown explosively, and data management and transmission have become increasingly important. With such vast quantities of data, high data security, centralized data management, reliable data transmission, and fast data processing are required. To meet these requirements, data centers have come into being. During data center construction, core switches play an important role in meeting construction requirements.

The ways people consume entertainment, communicate, and even shop have all been greatly changed by the Internet, which has become an essential part of everyday life. As a result, people have increasingly high requirements for Internet services. Mature mobile Internet and service-oriented cloud computing technologies bring great opportunities for Internet enterprises. To provide various services for a large number of users, Internet enterprises face various challenges when constructing a reliable Internet data center network. This document describes those challenges and a data center network solution that is compatible with future cloud computing architectures.

Technology Forum

- Non-blocking Switching in the Cloud Computing Era

- CSS: A Simple and Efficient Network Solution

- Virtualizing VMs in Data Center Switches

- TRILL Large Layer 2 Network Solution

- Control Tower for Virtualized Data Center Networks

- From North-South Split to a Unification

- Huawei Next-Generation Network Operating System VRP V8

Data centers are at the core of the cloud computing era that is now beginning. How data centers can better support the fast growing demand for cloud computing services is of great concern to data center owners. Going forth, customers will construct larger data centers, purchase more servers with higher performance, and develop more applications. If data center networks cannot adapt to these changes, they will become bottlenecks to data center growth.

After decades of development, Ethernet technology, which is flexible and simple to deploy, has become the primary local area network technology. All-in-IP is now the norm in the communications industry.

With IT demands rising rapidly, and resource-strained organizations challenged to meet them, a transformation within IT is taking place. Virtualization and cloud computing are significantly changing how computing resources and services are managed and delivered. Virtual machines have increased the efficiency of physical computing resources, while reducing IT system operation and maintenance (O&M) costs. In addition, they enable the dynamic migration of computing resources, enhancing system reliability, flexibility, and scalability.

for data in a data center. Distributed architecture leads to a huge amount of collaboration between servers, as well as a high volume of east-to-west traffic. In addition, virtualization technologies are widely used in data centers, greatly increasing the computational workload of each physical server.

As technologies mature and new applications emerge, many enterprise IT systems have begun using virtual machines, signifying the first step toward cloud computing. By running multiple virtual servers on one physical server, IT systems gain many benefits, and enterprises do not need to purchase large numbers of servers. Virtual machines also add high availability (HA) to data centers, reducing service interruptions and associated complaints. Virtualization technology makes effective use of powerful hardware and can reduce wasted hardware capacity by more than 10%.

"North" and "south" on a data center network: The north is the data network running Ethernet/IP protocols, whereas the south is the storage area network (SAN) using the Fibre Channel (FC) protocol. The north and south are separated by servers. Based on service characteristics, the data network is called the front-end network, and the SAN is called the back-end network. The two networks are physically isolated from each other and use different protocols, standards, and switching devices.

Radical changes in network traffic are driving new technologies and innovations. The burgeoning use of smart devices has caused an explosive increase in mobile Internet traffic. The cloud computing model pioneered by Google and Amazon is having a huge impact on the traditional usage of computing, storage, and network resources. Perhaps most notably, new businesses built on the mobile Internet and new business models such as cloud computing are forcing the underlying physical device architecture to change to meet service requirements. The Network Operating System (NOS), which sits at the core of the network devices that carry these services and shape end users' experience of today's networks, needs to change to support these new trends and demands.